Within the office, or stored on various laptops, there is a wealth of information held in downloaded PDF files. These could be academic papers, workflow walkthroughs or previous site reports.

These files may or may not be named appropriately. If they are, the default Windows Explorer search can be used to find a document, but this assumes prior knowledge of the file and its contents.

With so many files to search through, inconsistent names and the chance that a document may not even be there, a search engine seemed a good way to make these dormant files more accessible and useful.

Luckily, I had recently been watching a few videos online in which people built their own search engines as side projects. One comment mentioned that this would be good for indexing local knowledge, and the idea sat in my head until now, when I hope to apply it to a digital library context.

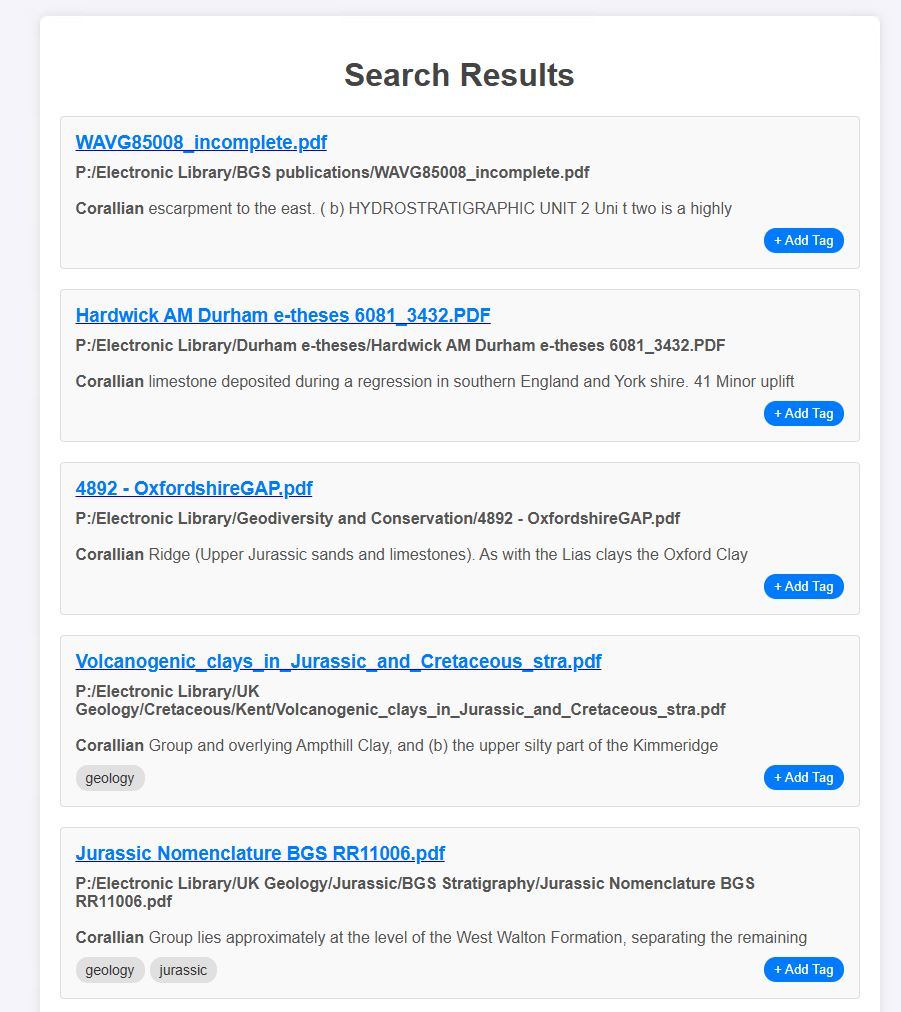

Let's say the user is writing a report about a site where the Corallian geology is present. With the new search engine, the user can search for key terms, lithological units etc. and find out what information is already on the system.

This can make the data acquisition phase more efficient.

The first step was to figure out what PDF files we actually have on the system. For this, a simple Python script was put together to walk through all the sub-directories of a directory given as an argument and return the path of each file ending in .pdf that it encounters.
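A minimal sketch of such a directory walk is shown here, using only the standard library. The function name `find_pdfs` is my own, and matching the extension case-insensitively is an assumption rather than something the original script is known to do.

```python
import os


def find_pdfs(root):
    """Yield the full path of every .pdf file found under root."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            # Compare in lower case so "REPORT.PDF" is picked up too.
            if name.lower().endswith(".pdf"):
                yield os.path.join(dirpath, name)
```

Calling `list(find_pdfs(some_directory))` gives the full list of paths, ready to be written to the database.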

Once this data was acquired, I decided to use a Postgres database to store the information. The first table was set up with the columns documentid and filepath.

We now needed to read the content of these files and extract the keywords. For this the Python library PyPDF2 was used, along with Psycopg for interacting with the Postgres database.

We iterated over the rows of the files table, used the filepath to locate each file and had PyPDF2 read it using the built-in extract_text() method. This was attempted on each page, with the result appended to an initially empty string for each file.
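The per-page extraction could look something like the sketch below. It assumes a PyPDF2 version that provides `PdfReader` and `page.extract_text()`. The function names are mine, and silently skipping pages that raise an error is an assumption about what "attempted" means here.

```python
def extract_text_from_pages(pages):
    """Join the text of each page, skipping pages that cannot be read."""
    text = ""
    for page in pages:
        try:
            page_text = page.extract_text() or ""
        except Exception:
            # Some pages (scans, damaged objects) cannot be read; skip them.
            page_text = ""
        text += page_text + "\n"
    return text


def extract_pdf_text(filepath):
    """Read one PDF from disk and return its text as a single string."""
    # Imported here so the helper above can be used without PyPDF2 installed.
    from PyPDF2 import PdfReader

    reader = PdfReader(filepath)
    return extract_text_from_pages(reader.pages)
```

Scanned PDFs without a text layer will come back as empty strings with this approach, since no OCR is involved.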

For now, the extracted text was pushed to a new table with the columns file_id, content and keywords. Content and keywords took the same data, with Postgres' to_tsvector applied to the keywords column to allow efficient searching of potentially large bodies of text.
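As a rough sketch, the table and insert might look like this when driven from Python through a DB-API cursor such as Psycopg's. The table name `file_content`, the `'english'` text search configuration and the GIN index are all assumptions of mine, not details taken from the project.

```python
# Hypothetical schema: one row per document, keywords stored as a tsvector.
CREATE_CONTENT_TABLE = """
CREATE TABLE IF NOT EXISTS file_content (
    file_id  integer PRIMARY KEY,
    content  text,
    keywords tsvector
);
CREATE INDEX IF NOT EXISTS file_content_keywords_idx
    ON file_content USING GIN (keywords);
"""

INSERT_CONTENT = """
INSERT INTO file_content (file_id, content, keywords)
VALUES (%s, %s, to_tsvector('english', %s))
"""


def store_content(cur, file_id, text):
    """Insert one document's text and its tsvector using a DB-API cursor."""
    # Postgres text values cannot contain NUL characters, which extracted
    # PDF text occasionally does, so strip them first.
    clean = text.replace("\x00", "")
    cur.execute(INSERT_CONTENT, (file_id, clean, clean))
```

Passing the text as a parameter, rather than formatting it into the SQL string, avoids quoting problems with whatever characters come out of the PDF.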

With this data stored, we are now ready to set up a web server to search it.

Creating the search engine

I initially intended to use Flask for this project. In fact, the first version was written in Python as a simple Flask server. Not long after seeing it work, I decided to switch to Go (Golang) to try to speed up the app's performance. As a compiled language, Go generally performs better than Python, and it has built-in support for concurrency.

By the end, the project was condensed into 3 main files:

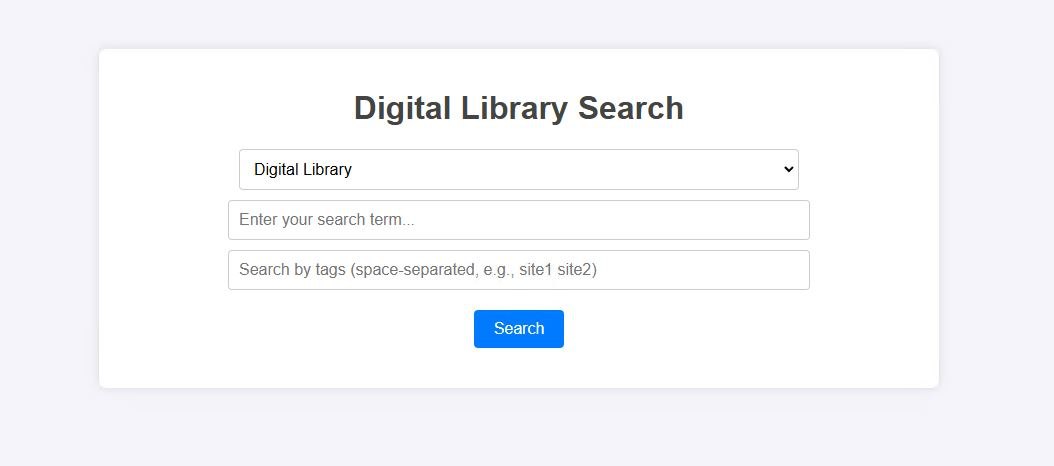

The pq library was used to interface Go with the Postgres database. The frontend was the next part to set up. Since I was setting up a library for two different network drive locations, I used a dropdown to select which one to search within.

Below this is a simple input for key words or terms to search for.

After testing this for a bit, I decided that the option to tag documents would be beneficial. For example, I may find a paper specifically about the geology of a certain area. If I searched for this geology I would receive every mention of it, many of which may not be relevant to the location I am looking at. However, if I include the tag in my search then only files that match the keyword AND carry the given tag(s) are returned.

Tagging gives users more control over finding what they are searching for and lets them split files into categories.

The search button then posts the form to the searchHandler function on the server, which reads each field, builds a SQL query to return the matching rows from the database and renders them on the results.html page.
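The query-building step can be sketched as below. It is written in Python to match the ingest scripts rather than the Go handler itself, and the table names (`files`, `file_content`) and the `location` and `tags` columns are hypothetical. The real handler may be structured quite differently.

```python
def build_search_query(terms, tags=(), location=None):
    """Return (sql, params) for a keyword search with optional filters."""
    sql = (
        "SELECT f.documentid, f.filepath "
        "FROM files f JOIN file_content c ON c.file_id = f.documentid "
        "WHERE c.keywords @@ plainto_tsquery('english', %s)"
    )
    params = [terms]
    if location:
        # Restrict results to one of the network drive locations.
        sql += " AND f.location = %s"
        params.append(location)
    if tags:
        # @> means "contains": the row must carry every requested tag.
        sql += " AND f.tags @> %s::text[]"
        params.append(list(tags))
    return sql, params
```

The important design choice is that user input only ever travels as parameters, never as part of the SQL text. In Go with pq the same idea applies, with `$1`, `$2` style placeholders in place of `%s`.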

Future ideas/plans

The entire project comes down to an end result of 3 files and 7 functions. The simplicity of the project allows it to be lightweight and provide fast results to the user.

Future intentions for the project include a weekly check for new PDF files, adding these along with their content to the database. This could be done using Windows Task Scheduler and a script that checks whether the files in the database are still at their recorded paths. If not, that row and its data are removed from the database. The script then trawls the directories again looking for anything not in the database, building a list before pushing the files and their contents to the database.

In this way, the database will stay current with any changes or file movements. This will spare users the annoyance of clicking a search result only to have it show up as a missing file.
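The core of that weekly check is a comparison between what the database holds and what is on disk. A small sketch of that comparison, with a hypothetical function name and no database code, might be:

```python
import os


def plan_sync(db_paths, disk_paths, exists=os.path.exists):
    """Return (stale, new): rows to delete and files to add."""
    db_paths = set(db_paths)
    # Rows whose file has been moved or deleted since the last run.
    stale = sorted(p for p in db_paths if not exists(p))
    # Files found on disk that the database does not know about yet.
    new = sorted(set(disk_paths) - db_paths)
    return stale, new
```

Here `db_paths` would come from the filepath column and `disk_paths` from a fresh directory walk. The `exists` argument is only there so the function can be tested without touching the file system.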

The other plan is to increase the types of files we can include. Currently we only index PDFs because PyPDF2 reads them easily. I intend to extend this to .PPTX and .DOCX files in the future. This would let the search engine include lecture slides in its results, which could make it useful in an academic context, perhaps for students on their own systems.

To achieve this, MarkItDown could be used. This tool, recently released by Microsoft, converts a multitude of file formats to Markdown (.md) files. It is intended for use with LLMs for data processing; however, it will also give us a plain-text file for our processing script to extract content from. This opens up new possibilities and also speeds up and simplifies the data collection process.

MarkItDown can also iterate over the contents of zip files, which opens up the possibility of including file archives in search results.
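A possible starting point is sketched below, assuming the `markitdown` Python package is installed. The `SUPPORTED` set and both function names are my own illustrative choices, and this has not been wired into the project yet.

```python
import os

# Extensions the sketch hands to MarkItDown; an illustrative choice.
SUPPORTED = {".pdf", ".docx", ".pptx"}


def is_supported(path):
    """True if the file extension is one we want to index."""
    return os.path.splitext(path)[1].lower() in SUPPORTED


def to_text(path):
    """Convert one file to Markdown text with MarkItDown."""
    # Imported lazily so is_supported() works without the package installed.
    from markitdown import MarkItDown

    result = MarkItDown().convert(path)
    return result.text_content
```

With this in place the directory walk could filter on `is_supported` instead of checking for .pdf alone, and the same text would flow into the existing content table.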

The keywords are lacking in the current iteration, as all of the content is assigned to keywords. I have played around with filtering out simple terms such as "the", "a", "as" and "and", but I believe it would be useful to have a dictionary to compare each keyword against, as well as a list of words to exclude.
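One way this filtering could work is sketched here. The stop list is deliberately tiny and illustrative, and the `vocabulary` argument stands in for the dictionary idea. Neither reflects what is currently in the project.

```python
import re

# A tiny illustrative stop list; a real one would be much longer.
STOPWORDS = {"the", "a", "as", "and", "of", "in", "is", "to"}


def extract_keywords(text, vocabulary=None, stopwords=STOPWORDS):
    """Return the distinct words worth indexing, in first-seen order."""
    words = re.findall(r"[a-z]+", text.lower())
    kept = [
        w for w in words
        # Drop stop words, and anything outside the vocabulary if one is given.
        if w not in stopwords and (vocabulary is None or w in vocabulary)
    ]
    # dict.fromkeys removes duplicates while preserving order.
    return list(dict.fromkeys(kept))
```

It is worth noting that when a tsvector is built with Postgres' `english` configuration, common stop words are already dropped, so a custom list mainly helps with domain-specific noise.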

The final idea is to geocode the files. For this we can extract place names from each file and associate one or more spatial points with it. I envisage a GIS solution where a user can search for keywords and receive the results as points on a map. The other idea is a 'geo-helper', which may be useful in a mineral safeguarding or exploration context. The user defines an area such as a boundary or planning extent. The system then finds any borehole or geological records within it using publicly available datasets, and uses these to return a list of potentially relevant documents.

I plan to attempt to implement this idea in the future.
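To make the geocoding idea a little more concrete, here is a very rough sketch. The gazetteer is a hypothetical two-entry lookup with approximate coordinates, where a real version would draw on a proper place-name dataset, and the bounding box is a stand-in for a true boundary polygon.

```python
import re

# Hypothetical gazetteer: place name -> approximate (latitude, longitude).
GAZETTEER = {
    "Oxford": (51.752, -1.258),
    "Weymouth": (50.614, -2.457),
}


def find_places(text, gazetteer=GAZETTEER):
    """Return {place: (lat, lon)} for each gazetteer name found in text."""
    found = {}
    for name, point in gazetteer.items():
        # Whole-word, case-insensitive match on the place name.
        if re.search(r"\b" + re.escape(name) + r"\b", text, re.IGNORECASE):
            found[name] = point
    return found


def in_bounds(point, south, west, north, east):
    """True if (lat, lon) lies inside the bounding box."""
    lat, lon = point
    return south <= lat <= north and west <= lon <= east
```

Each document's points could then be stored alongside its row, so that a map view or an area search only has to filter on coordinates.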